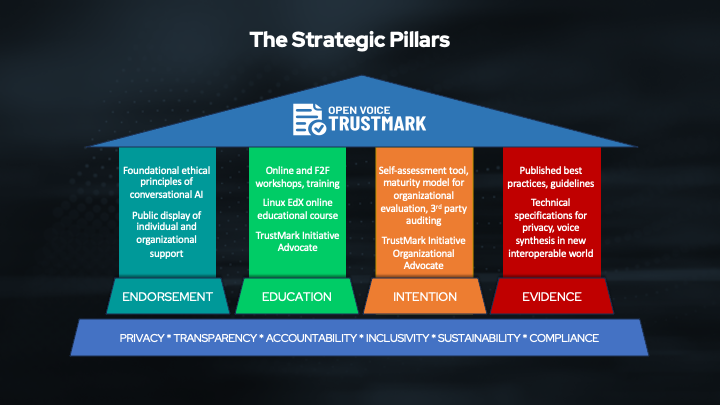

The Trustmark’s strategic pillars are endorsement, education, intention, and evidence. By garnering support for ethical principles, providing educational resources, offering self-assessment tools, and promoting best practices, the Trustmark aims to mitigate risks associated with AI, including privacy breaches, security threats, performance issues, lack of control, economic ramifications, and societal implications. Drawing on an understanding of both technology and business risks, it seeks to equip organizations and individuals with risk mitigation strategies tailored to their needs.

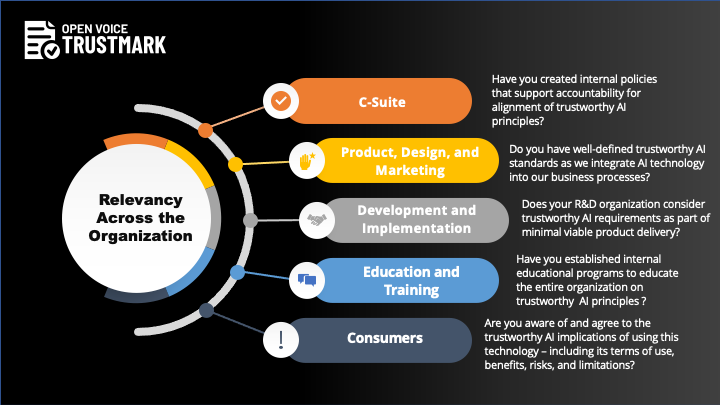

Central to the Trustmark’s framework are six key principles: privacy, transparency, accountability, inclusivity, sustainability, and compliance. These principles guide stakeholders across the AI lifecycle – from the C-suite to product design, development, education, training, and consumer engagement – in ensuring that AI solutions align with societal values, legal requirements, and best practices.